5 Unheard Methods To achieve Higher Deepseek

페이지 정보

본문

I’ve tried the identical - with the identical outcomes - with Deepseek Coder and CodeLLaMA. We achieve the most vital boost with a mix of Free DeepSeek v3-coder-6.7B and the wonderful-tuning on the KExercises dataset, resulting in a pass charge of 55.28%. Fine-tuning on directions produced nice results on the opposite two base models as nicely. Now, let’s see what MoA has to say about one thing that has happened inside the final day or two… They advised a narrative of an organization that functioned extra like a research lab than a for-profit enterprise and was unencumbered by the hierarchical traditions of China’s excessive-pressure tech industry, even as it grew to become liable for what many investors see as the newest breakthrough in AI. However, it's not arduous to see the intent behind DeepSeek's fastidiously-curated refusals, and as thrilling because the open-source nature of Deepseek Online chat is, one ought to be cognizant that this bias can be propagated into any future fashions derived from it. That model (the one that really beats ChatGPT), nonetheless requires a massive amount of GPU compute.

I’ve tried the identical - with the identical outcomes - with Deepseek Coder and CodeLLaMA. We achieve the most vital boost with a mix of Free DeepSeek v3-coder-6.7B and the wonderful-tuning on the KExercises dataset, resulting in a pass charge of 55.28%. Fine-tuning on directions produced nice results on the opposite two base models as nicely. Now, let’s see what MoA has to say about one thing that has happened inside the final day or two… They advised a narrative of an organization that functioned extra like a research lab than a for-profit enterprise and was unencumbered by the hierarchical traditions of China’s excessive-pressure tech industry, even as it grew to become liable for what many investors see as the newest breakthrough in AI. However, it's not arduous to see the intent behind DeepSeek's fastidiously-curated refusals, and as thrilling because the open-source nature of Deepseek Online chat is, one ought to be cognizant that this bias can be propagated into any future fashions derived from it. That model (the one that really beats ChatGPT), nonetheless requires a massive amount of GPU compute.

ChatGPT excels at chatty tasks, writing, and normal drawback-solving. The newest model (R1) was introduced on 20 Jan 2025, while many in the U.S. I also tried having it generate a simplified version of a bitmap-primarily based garbage collector I wrote in C for considered one of my previous little language projects, and while it could get began with that, it didn’t work in any respect, no quantity of prodding acquired it in the suitable direction, and each its feedback and its descriptions of the code were wildly off. The clean version of the KStack exhibits much better outcomes during superb-tuning, however the move fee is still lower than the one which we achieved with the KExercises dataset. It additionally calls into question the overall "cheap" narrative of DeepSeek, when it could not have been achieved without the prior expense and energy of OpenAI. Using an LLM allowed us to extract features across a big variety of languages, with relatively low effort. KStack - Kotlin massive language corpus. FP8-LM: Training FP8 giant language fashions. "Despite their apparent simplicity, these problems usually contain complicated solution strategies, making them glorious candidates for constructing proof information to improve theorem-proving capabilities in Large Language Models (LLMs)," the researchers write.

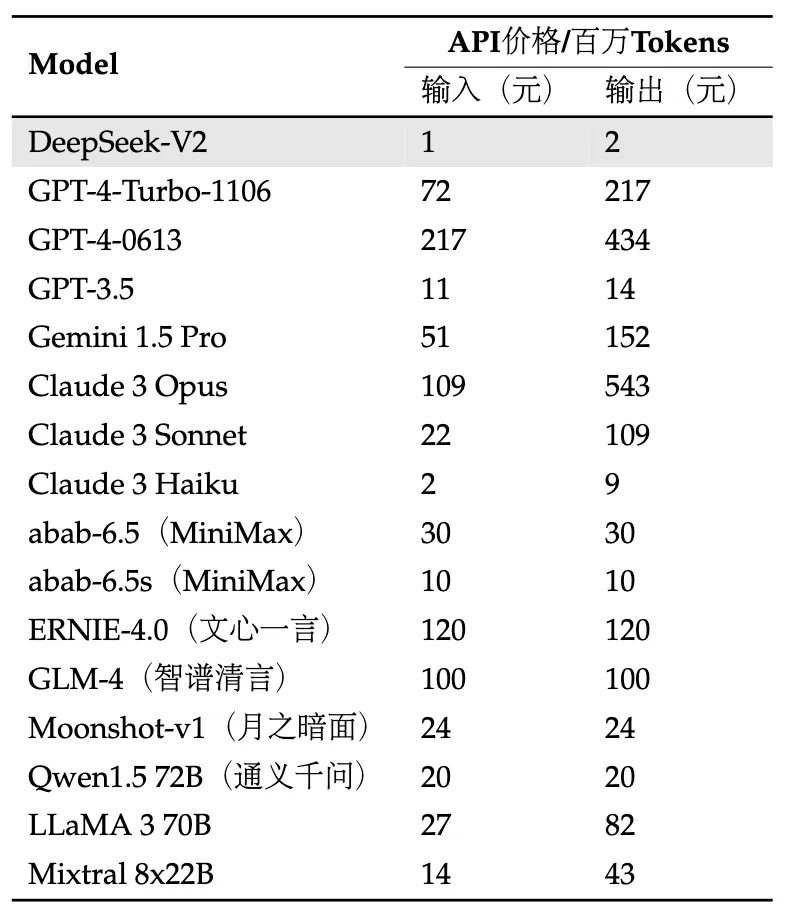

Behind the drama over DeepSeek v3’s technical capabilities is a debate inside the U.S. DeepSeek’s costs will probably be greater, notably for skilled and enterprise-stage users. 7.5 You conform to indemnify, defend, and hold us and our affiliates and licensors (if any) harmless against any liabilities, damages, and prices (together with cheap attorneys'charges) payable to a third get together arising out of a breach by you or any user of your account of those Terms, your violation of all relevant legal guidelines and rules or third social gathering rights, your fraud or different unlawful acts, or your intentional misconduct or gross negligence, to the extent permiteed by the applicable law. We'd like someone with a Radiation Detector, to head out onto the seashore at San DIego, and grab a reading of the radiation stage - especially close to the water. Right where the north Pacific Current would convey what was deep water up by Mendocino, into the shoreline area! "North Pacific Current." In truth, it makes Perfect sense. The efficiency of DeepSeek-Coder-V2 on math and code benchmarks. However, the Kotlin and JetBrains ecosystems can supply rather more to the language modeling and ML community, similar to learning from instruments like compilers or linters, extra code for datasets, and new benchmarks more relevant to day-to-day production growth tasks.

Note: All fashions are evaluated in a configuration that limits the output length to 8K. Benchmarks containing fewer than one thousand samples are examined a number of times utilizing various temperature settings to derive robust final outcomes. Though initially designed for Python, HumanEval has been translated into a number of programming languages. Good knowledge is the cornerstone of machine learning in any domain, programming languages included. So what are LLMs good for? The exams we implement are equivalent to the original HumanEval tests for Python, and we fix the prompt signatures to deal with the generic variable signature we describe above. All JetBrains HumanEval solutions and exams have been written by an expert competitive programmer with six years of experience in Kotlin and independently checked by a programmer with 4 years of experience in Kotlin. Another focus of our dataset improvement was the creation of the Kotlin dataset for instruct-tuning. How has DeepSeek affected international AI growth?

If you have any thoughts with regards to in which and how to use deepseek français, you can contact us at the web-page.

- 이전글Bar X Fruit Machine Online 25.03.20

- 다음글Pubic Unpleasant - Tips When Shaving 25.03.20

댓글목록

등록된 댓글이 없습니다.