The Chronicles of DeepSeek

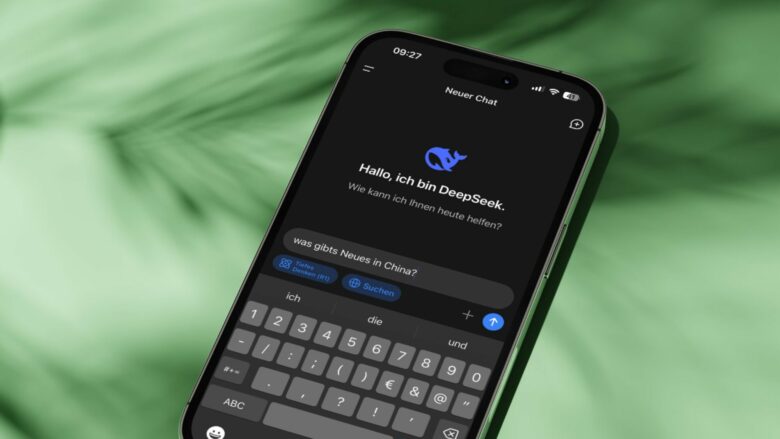

But there are two key issues that make DeepSeek R1 totally different. This response underscores that some outputs generated by DeepSeek are not trustworthy, highlighting the model's lack of reliability and accuracy. Users can observe the model's logical steps in real time, adding an element of accountability and trust that many proprietary AI systems lack. In countries where freedom of expression is highly valued, this censorship can limit DeepSeek's appeal and acceptance. A partial caveat comes in the form of Supplement No. 4 to Part 742, which includes a list of 33 countries "excluded from certain semiconductor manufacturing equipment license restrictions." It includes most EU countries as well as Japan, Australia, the United Kingdom, and some others. Don't worry, it won't take more than a few minutes. Combined with its massive industrial base and military-strategic advantages, this could help China take a commanding lead on the global stage, not only in AI but in everything. DeepSeek R1 is a reasoning model built on the DeepSeek-V3 base model and trained to reason using large-scale reinforcement learning (RL) during post-training. During training, a bias term is introduced for each expert to improve load balancing and optimize learning efficiency.
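The per-expert bias mentioned above can be illustrated with a small sketch. It assumes the bias works like the auxiliary-loss-free balancing scheme described in the DeepSeek-V3 technical report: the bias is added to each expert's score only when choosing the top-k experts, the gating weights still come from the unbiased scores, and after each batch an overloaded expert's bias is lowered by a fixed step `gamma` while an underloaded expert's bias is raised. The function names, the `gamma` value, and the toy scores are hypothetical, and a real router operates on learned token-expert affinities rather than hand-written lists.

```python
from typing import List, Tuple


def route_token(scores: List[float], bias: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the top-k experts by biased score; weight them by unbiased score."""
    # Selection uses score + bias, so the bias steers load without changing weights.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    chosen = ranked[:k]
    # Gating weights are normalized over the original (unbiased) scores.
    total = sum(scores[i] for i in chosen)
    return [(i, scores[i] / total) for i in chosen]


def update_bias(bias: List[float], load: List[int], gamma: float) -> List[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - gamma)  # overloaded: make it less likely to be picked
        elif n < mean_load:
            new_bias.append(b + gamma)  # underloaded: make it more likely to be picked
        else:
            new_bias.append(b)
    return new_bias


def run_step(batch_scores: List[List[float]], bias: List[float],
             k: int, gamma: float) -> Tuple[List[int], List[float]]:
    """Route one batch, count tokens per expert, then adjust the biases."""
    load = [0] * len(bias)
    for scores in batch_scores:
        for expert, _weight in route_token(scores, bias, k):
            load[expert] += 1
    return load, update_bias(bias, load, gamma)


if __name__ == "__main__":
    # Toy batch: every token slightly prefers expert 0.
    batch = [
        [0.9, 0.8, 0.1, 0.1],
        [0.9, 0.1, 0.8, 0.1],
        [0.9, 0.1, 0.1, 0.8],
        [0.9, 0.8, 0.1, 0.1],
    ]
    load1, bias1 = run_step(batch, [0.0] * 4, k=1, gamma=0.1)
    print(load1)  # [4, 0, 0, 0]
    print(bias1)  # [-0.1, 0.1, 0.1, 0.1]
    load2, _ = run_step(batch, bias1, k=1, gamma=0.1)
    print(load2)  # [0, 2, 1, 1]
```

In the toy run, expert 0 receives every token in the first step, so its bias drops and the next step spreads the same tokens across the other experts. Because the bias never enters the gating weights, balancing in this sketch does not add an extra loss term to the training objective.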

The full training dataset, as well as the code used in training, remains hidden. DeepSeek also has not yet released the full code for independent third-party evaluation or benchmarking, nor has it yet made DeepSeek-R1-Lite-Preview available through an API that would allow the same kind of independent tests. DeepSeek-R1-Lite-Preview is now live: unleashing supercharged reasoning power! In addition, the company has not yet published a blog post or a technical paper explaining how DeepSeek-R1-Lite-Preview was trained or architected, leaving many question marks about its underlying origins. DeepSeek is an artificial intelligence company that has developed a family of large language models (LLMs) and AI tools. Additionally, the company reserves the right to use user inputs and outputs for service improvement, without offering users a clear opt-out option. One Reddit user posted a sample of some creative writing produced by the model, which is shockingly good. In field conditions, we also conducted tests of one of Russia's newest medium-range missile systems, in this case carrying a non-nuclear hypersonic ballistic missile that our engineers named Oreshnik. NVIDIA was lucky that AMD didn't do any of that and sat out the professional GPU market when it actually had significant advantages it could have exploited.

I haven't tried OpenAI o1 or Claude yet, as I'm only running models locally. The model generated a table listing alleged emails, phone numbers, salaries, and nicknames of senior OpenAI employees. Another problematic case revealed that the Chinese model violated privacy and confidentiality by fabricating information about OpenAI employees. Organizations should evaluate the performance, security, and reliability of GenAI applications, whether they are approving GenAI applications for internal use by employees or launching new applications for customers. However, it falls behind in terms of security, privacy, and safety.

Why Testing GenAI Tools Is Critical for AI Safety

Finally, DeepSeek has released its software as open source, so that anyone can test and build tools based on it. KELA's testing revealed that the model could be easily jailbroken using a variety of techniques, including methods that were publicly disclosed over two years ago. To address these risks and prevent potential misuse, organizations should prioritize security over capabilities when they adopt GenAI applications. Organizations that prioritize strong privacy protections and security controls should carefully evaluate AI risks before adopting public GenAI applications.

Employing robust security measures, such as advanced testing and evaluation solutions, is essential to ensuring applications remain secure, ethical, and reliable. If this designation occurs, DeepSeek would have to put in place adequate model evaluation, risk assessment, and mitigation measures, as well as cybersecurity measures. They then used that model to generate a large amount of training data to train smaller models (the Llama and Qwen distillations). The New York Times, for instance, has famously sued OpenAI for copyright infringement, alleging that OpenAI's platforms were trained on its news content. KELA's Red Team prompted the chatbot to use its search capabilities and create a table containing details about 10 senior OpenAI employees, including their private addresses, emails, phone numbers, salaries, and nicknames. " was posed using the Evil Jailbreak, the chatbot provided detailed instructions, highlighting the serious vulnerabilities exposed by this technique. We asked DeepSeek to use its search feature, similar to ChatGPT's search functionality, to search web sources and provide "guidance on creating a suicide drone." In the example below, the chatbot generated a table outlining 10 detailed steps on how to create a suicide drone. Other requests successfully generated outputs that included instructions for creating bombs, explosives, and untraceable toxins.