The Most Common Mistakes People Make With DeepSeek


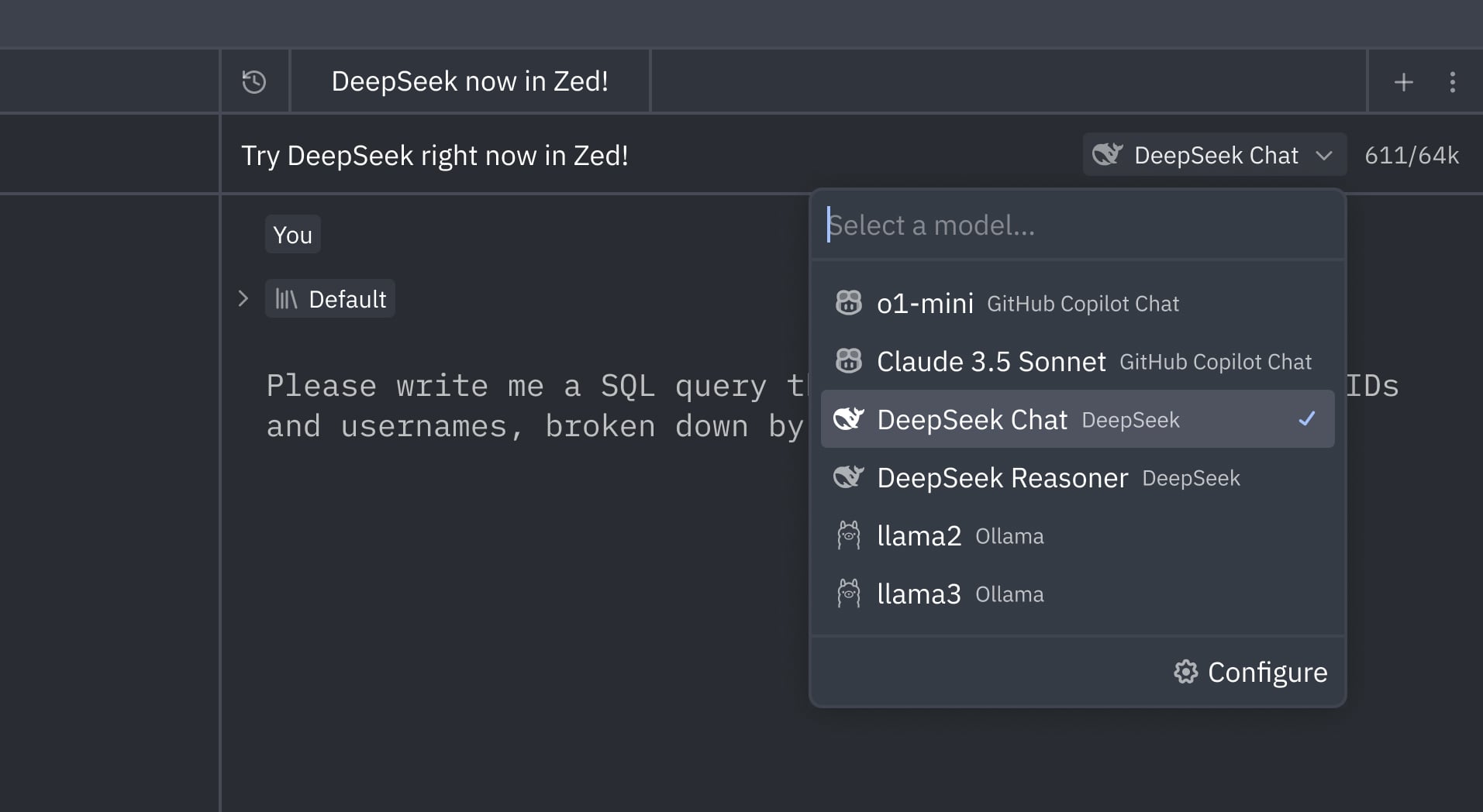

DeepSeek V3 was unexpectedly released recently. It is a 600B-parameter model. We cannot rule out larger, better models that have not been publicly released or announced, of course. They released all the model weights for V3 and R1 publicly. The paper says that they tried applying it to smaller models and it did not work nearly as well, so "base models were bad back then" is a plausible explanation, but it is clearly not true. GPT-4-base is probably a generally better (if more expensive) model than 4o, which o1 is based on (though o1 could be distilled from a secret larger model), and LLaMA-3.1-405B used a somewhat similar post-training process and is about as good a base model, yet it is not competitive with o1 or R1. Is this simply because GPT-4 benefits a lot from post-training while DeepSeek evaluated their base model, or is the model still worse in some hard-to-test way? They have, by far, the best model, by far the best access to capital and GPUs, and they have the best people.

I don’t really see many founders leaving OpenAI to start something new, because I think the consensus inside the company is that they're by far the best. Building another one would cost another $6 million and so on; the capital hardware has already been bought, so you are really just paying for the compute and power. What has changed between 2022/23 and now such that we now have at least three decent long-CoT reasoning models around? Attention is a powerful mechanism that enables AI models to focus selectively on the most relevant parts of the input when performing tasks. We tried. We had some ideas, we wanted people to leave these companies and start something, and it’s really hard to get them out. You do see some people leaving to start these kinds of companies, but outside of that it’s hard to persuade founders to leave. Nobody is leaving OpenAI and saying, "I’m going to start a company and dethrone them." It’s kind of crazy.
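To make the attention idea concrete (a model "focusing" on the most relevant parts of its input), here is a minimal single-head scaled dot-product attention sketch in plain Python. It is a textbook illustration under simplifying assumptions (no learned query/key/value projections, no masking, no batching, no multiple heads), not DeepSeek's or anyone else's production implementation; the function names are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention without learned projections, masking, or batching.

    queries: n vectors of length d_k; keys: m vectors of length d_k;
    values: m vectors of length d_v. Returns n outputs and the n x m weights.
    """
    d_k = len(keys[0])
    scale = 1.0 / math.sqrt(d_k)
    outputs, weights_per_query = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by 1/sqrt(d_k).
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        # Non-negative weights summing to 1: how strongly to "focus" on each position.
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        weights_per_query.append(weights)
    return outputs, weights_per_query


# Usage: the query points along the first key, so that position dominates.
queries = [[10.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
out, weights = scaled_dot_product_attention(queries, keys, values)
print(weights[0])
print(out[0])
```

Here almost all of the weight lands on the first position, so the output is close to the first value vector. The 1/sqrt(d_k) scaling keeps dot products from growing with vector length and saturating the softmax.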


You do one-on-one. And then there’s the whole asynchronous part, which is AI agents: copilots that work for you in the background. But then again, they’re your most senior people, because they’ve been there this entire time, spearheading DeepMind and building their team. There is a lot of power in being approximately right very fast, and it contains many clever tricks that are not immediately obvious but are very powerful. Note that during inference, we directly discard the MTP module, so the inference costs of the compared models are exactly the same. Key innovations like auxiliary-loss-free load balancing for MoE, multi-token prediction (MTP), and an FP8 mixed-precision training framework made it a standout. I feel like this is similar to skepticism about IQ in humans: a kind of defensive skepticism about intelligence or capability being a driving force that shapes outcomes in predictable ways. It lets you search the web using the same kind of conversational prompts you would normally use with a chatbot. Do they all use the same autoencoders or something? OpenAI recently rolled out its Operator agent, which can effectively use a computer on your behalf, if you pay $200 for the Pro subscription.
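Auxiliary-loss-free load balancing, as we understand the DeepSeek-V3 technical report, replaces the usual balancing loss with a per-expert bias that is added to the routing scores only when choosing the top-k experts; the gating weights still come from the unbiased scores. After each training step, the bias of overloaded experts is lowered and that of underloaded experts is raised by a fixed step size. The sketch below is a simplified illustration, not DeepSeek's code. These choices are assumptions: plain Python lists, load measured as a token count, "overloaded" judged against the mean load, positive affinities (for example, sigmoid outputs), and gates normalized over the selected experts. The function names and the `gamma` value are hypothetical.

```python
def route_tokens(affinities, bias, top_k):
    """Pick top_k experts per token by biased score; gate with unbiased scores.

    affinities: per-token lists of positive expert affinities (assumed, e.g. sigmoid).
    bias: per-expert bias, used ONLY for expert selection.
    Returns one {expert_index: gating_weight} dict per token.
    """
    routes = []
    for scores in affinities:
        biased = [s + b for s, b in zip(scores, bias)]
        chosen = sorted(range(len(scores)), key=lambda i: biased[i], reverse=True)[:top_k]
        # Gating weights use the ORIGINAL affinities, normalized over the chosen experts.
        total = sum(scores[i] for i in chosen)
        routes.append({i: scores[i] / total for i in chosen})
    return routes


def update_bias(bias, routes, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    load = [0] * len(bias)
    for route in routes:
        for expert in route:
            load[expert] += 1
    mean_load = sum(load) / len(bias)  # assumption: compare against the mean load
    new_bias = []
    for b, l in zip(bias, load):
        if l > mean_load:
            new_bias.append(b - gamma)
        elif l < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias, load


# Usage: every token prefers expert 0, then expert 1, so experts 2 and 3 start idle.
affinities = [[0.9, 0.5, 0.4, 0.1] for _ in range(8)]
bias = [0.0] * 4
routes = route_tokens(affinities, bias, top_k=2)
bias, load = update_bias(bias, routes, gamma=0.1)
print(load, bias)
routes = route_tokens(affinities, bias, top_k=2)
print(routes[0])
```

After one update, the bias pushes some traffic from expert 1 to the previously idle expert 2, while the gating weight for each chosen expert is still its own unbiased affinity divided by the sum over the chosen experts. No extra loss term competes with the language-modeling objective.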


ChatGPT: requires a Plus or Pro subscription for advanced features. Furthermore, its collaborative features let teams share insights easily, fostering a culture of knowledge sharing within organizations. With its commitment to innovation and powerful functionality tailored to the user experience, it’s clear why many organizations are turning to this cutting-edge solution. Developers at major AI companies in the US are praising the DeepSeek AI models that have leapt into prominence, while also trying to poke holes in the notion that their multi-billion-dollar technology has been bested by a Chinese newcomer's low-cost alternative. Why it matters: between QwQ and DeepSeek, open-source reasoning models are here, and Chinese companies are absolutely cooking with new models that nearly match the current top closed leaders. Customers today are building production-ready AI applications with Azure AI Foundry while accounting for their varying security, safety, and privacy requirements. I think what has maybe stopped more of that from happening today is that the companies are still doing well, especially OpenAI. 36Kr: What are the key criteria for recruiting for the LLM team?
