New Article Reveals the Lowdown on DeepSeek China AI and Why You Will…

The extra performance comes at the cost of slower and more expensive output. It’s part of an important movement, after years of scaling models by raising parameter counts and amassing bigger datasets, toward achieving high performance by spending more energy on generating output. While specific training data details for DeepSeek are less public, it’s clear that code forms a large part of it. McCaffrey noted, "Because new developments in AI are coming so fast, it’s easy to get AI news fatigue." Get started coding in Python with AI Python for Beginners, a four-part course led by Andrew Ng. Complete the course and bring your ideas to life! The automated scientific discovery process is repeated to iteratively develop ideas in an open-ended fashion and add them to a growing archive of knowledge, thus imitating the human scientific community. The work stimulated growing interest in natural language processing, including from the U.S. DeepSeek released its latest large language model, R1, a week ago.

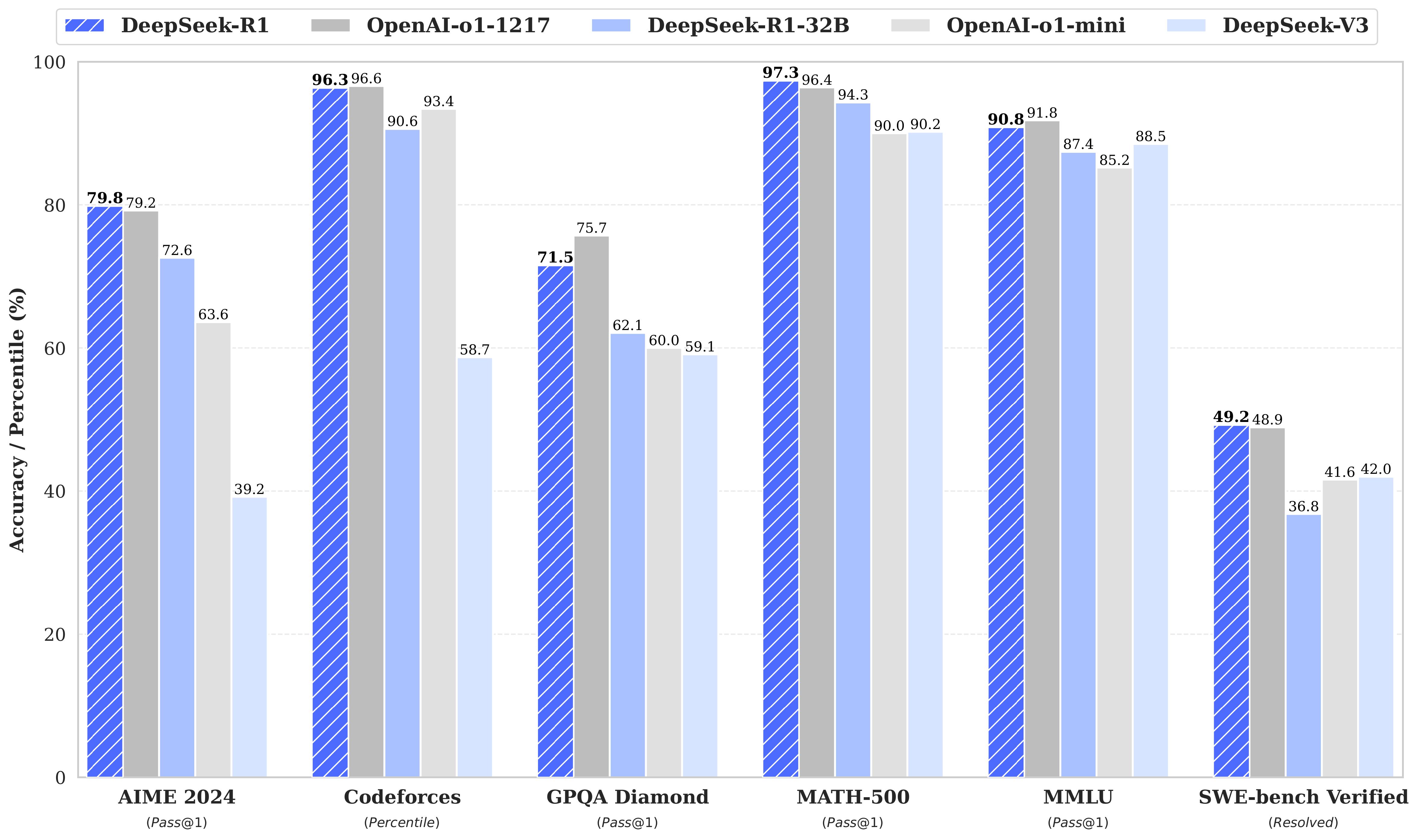

Why it matters: DeepSeek is challenging OpenAI with a competitive large language model. Large language models have made it possible to command robots using plain English. Scalable watermarking for identifying large language model outputs. PaliGemma comprises SigLIP, a vision transformer that turns images into embeddings; a linear layer that adapts the image embeddings to serve as input for the pretrained large language model Gemma; and Gemma, which estimates the noise to be removed from a robot action embedding to which noise has been added. Having worked on many software products, I know that, to make good decisions, I have to understand the people I hope to serve. Anthropic will contribute to developing Amazon’s Neuron toolkit, software that accelerates deep learning workloads on Trainium and Inferentia chips. Previously Anthropic ran its Claude models on Nvidia hardware; going forward, Anthropic will run them on Amazon’s Inferentia chips, according to The Information. According to DeepSeek, R1-lite-preview, using an unspecified number of reasoning tokens, outperforms OpenAI o1-preview, OpenAI GPT-4o, Anthropic Claude 3.5 Sonnet, Alibaba Qwen 2.5 72B, and DeepSeek-V2.5 on three out of six reasoning-intensive benchmarks. At the center of the dispute is a key question about AI’s future: how much control should companies have over their own AI models, when those programs were themselves built using data taken from others?

Behind the news: DeepSeek-R1 follows OpenAI in implementing this approach at a time when scaling laws that predict higher performance from bigger models and/or more training data are being questioned. Like o1-preview, most of its performance gains come from an approach known as test-time compute, which trains an LLM to think at length in response to prompts, using more compute to generate deeper answers. On AIME math problems, performance rises from 21 percent accuracy when it uses fewer than 1,000 tokens to 66.7 percent accuracy when it uses more than 100,000, surpassing o1-preview’s performance. R1-lite-preview performs comparably to o1-preview on several math and problem-solving benchmarks. We’re thinking: One of the team members compared π0 to GPT-1 for robotics - an inkling of things to come. I see so many people in the AI community building things to make the world better. It is good that people are researching things like unlearning, etc., for the purposes of (among other things) making it harder to misuse open-source models, but the default policy assumption should be that all such efforts will fail, or at best make it a bit more expensive to misuse such models. Technology remains the best way I know of to help people at scale through providing better education, career guidance, healthcare, personal safety, healthier food, or other things needed to support thriving.

While I can try to help out individuals here and there, technology is advancing rapidly, and this gives me a lot of optimism for the future. Working in AI, I'm fortunate to interact with many of the smartest and most capable technology and business leaders in the world. "Hyperscalers were losing big on AI, and further down the business chain, companies were cautious about AI but recognised its potential." Tim Cook must be rubbing his hands with glee that Apple did not rush in with a massive investment in AI, which Microsoft clearly did. As DeepSeek mentions, R1 offers a powerful, cost-efficient model that allows more users to harness state-of-the-art AI capabilities with minimal infrastructure investment. Released in 2021, CLIP (Contrastive Language-Image Pre-training) is a model that is trained to analyze the semantic similarity between text and images. ChatGPT or the multimodal subliminal messaging with the hidden text in the single frame of video. Overall, ChatGPT gave the best answers - but we’re still impressed by the level of "thoughtfulness" that Chinese chatbots display.
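As a rough illustration of the CLIP description above, text-image similarity is commonly scored as the cosine similarity between an image embedding and each candidate caption embedding. The sketch below is not CLIP itself: the three-dimensional vectors are made-up stand-ins for the embeddings a real model would produce, and `best_caption` is a hypothetical helper name.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def best_caption(image_embedding, caption_embeddings):
    """Return the caption whose embedding is most similar to the image embedding."""
    return max(
        caption_embeddings,
        key=lambda caption: cosine_similarity(
            image_embedding, caption_embeddings[caption]
        ),
    )


# Made-up embeddings for illustration only; a real model would compute these.
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a car": [0.1, 0.9, 0.3],
}

print(best_caption(image_embedding, caption_embeddings))
```

With these toy vectors the dog caption scores higher because its embedding points in nearly the same direction as the image embedding.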
