The Way to Learn DeepSeek

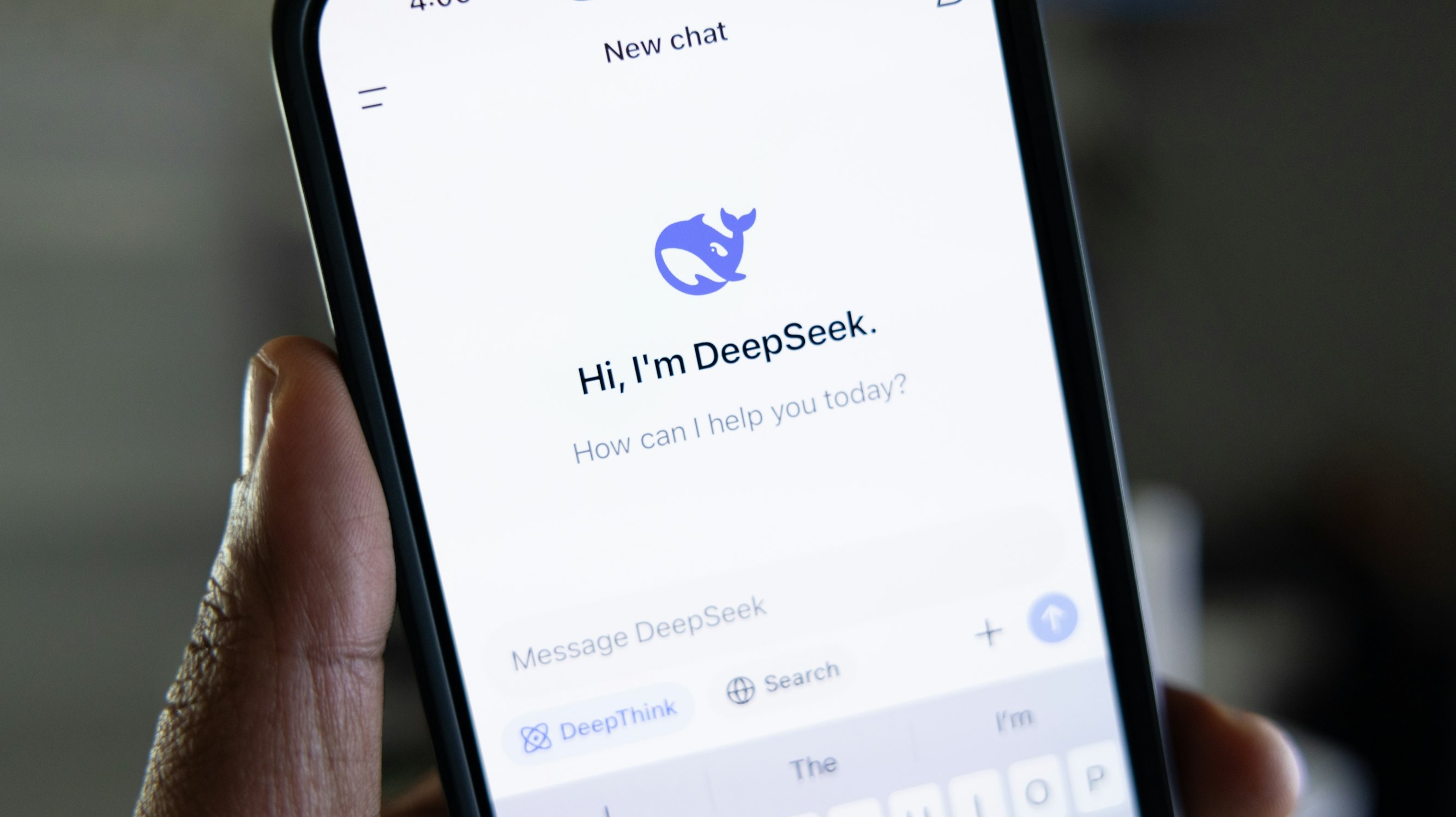

The DeepSeek API uses advanced AI algorithms to interpret and execute complex queries, delivering accurate and contextually relevant results across structured and unstructured data. As businesses increasingly rely on large volumes of data for decision-making, platforms like DeepSeek are proving indispensable in changing how we find information efficiently. Nvidia has released Nemotron-4 340B, a family of models designed to generate synthetic data for training large language models (LLMs). Resource optimization: DeepSeek achieved its results with 2.78 million GPU hours, considerably fewer than Meta's 30.8 million GPU hours for similar-scale models. Our evaluation results demonstrate that DeepSeek LLM 67B surpasses LLaMA-2 70B on various benchmarks, particularly in the domains of code, mathematics, and reasoning. It surpasses proprietary models like OpenAI's o1 reasoning model across a wide range of benchmarks, including math, science, and coding, at a fraction of its development cost. This exceptional performance, combined with the availability of DeepSeek Free, a version offering free access to certain features and models, makes DeepSeek accessible to a wide range of users, from students and hobbyists to professional developers.

DeepSeek's downloadable model shows fewer signs of built-in censorship than its hosted models, which appear to filter politically sensitive topics like Tiananmen Square. The Feroot Security researchers claim that computer code hidden in the website captures user login credentials during DeepSeek's account creation and user login process. Upon further analysis and testing, both sets of security researchers were unable to tell whether the code was used to transfer user data to the Chinese government when testing logins in North America. Now, a damning report suggests DeepSeek's website contains computer code that could share user login information with a Chinese telecommunications company that is barred from operating in the United States (via The Associated Press). While we can't independently corroborate Feroot Security's findings, The Associated Press shared the report with another group of security experts, who confirmed the presence of the malicious code in DeepSeek's code. You'll have privacy (no cloud storage) and a quick way to integrate R1 into your code or projects. Security and safety remain major setbacks that have forced most users to keep generative AI at arm's length. Looking ahead, we can expect even more integrations with emerging technologies, such as blockchain for enhanced security or augmented reality applications that could redefine how we visualize data.

But is the basic assumption here even true? Developed by a Chinese AI company, DeepSeek has garnered significant attention for its high-performing models, such as DeepSeek-V2 and DeepSeek-Coder-V2, which consistently perform strongly on industry benchmarks and even surpass renowned models like GPT-4 and LLaMA3-70B in specific tasks. "The earlier Llama models were great open models, but they're not fit for complex problems." DeepSeek-R1 and its related models represent a new benchmark in machine reasoning and large-scale AI performance. Problem-solving: DeepSeek's R1 model showcases advanced self-evolving reasoning capabilities, allowing for more autonomous problem-solving. "It's mind-boggling that we are unknowingly allowing China to survey Americans and we're doing nothing about it." For context, the US restricted China Mobile's operations, citing close ties between China Mobile and the Chinese military. While the Chinese AI startup admits in its privacy policy documentation that it stores user data, this new report reveals details suggesting that DeepSeek's ties to China are closer than previously thought. However, a separate report suggests DeepSeek spent $1.6 billion to develop its AI model, and not $6 million as previously thought. R1-Zero, however, drops the HF (human feedback) part: it's just reinforcement learning. However, this doesn't entirely rule out the possibility that user data was shared with the Chinese telecommunications company.

DeepSeek could be sharing user data with the Chinese government without authorization despite the US ban. Despite being one of the many companies that trained AI models in the past couple of years, DeepSeek is one of the very few that managed to get international attention. Over the past few years, there have been several instances where user data has been used to train AI models without authorization, ultimately breaching user trust and more. Remove it if you do not have GPU acceleration. I've been playing with it for a few days now. Please note: in the command above, replace 1.5b with 7b, 14b, 32b, 70b, or 671b if your hardware can handle a larger model. Feel free to start small (1.5B parameters) and move to a larger version later if you need more power. Welcome to DeepSeek Free! This repo contains AWQ model files for DeepSeek's Deepseek Coder 33B Instruct. Run the app to see a local webpage where you can upload files and chat with R1 about their contents. Ollama will download the necessary files and start DeepSeek R1 locally. Look for an "Install" or "Command Line Tools" option in the Ollama app interface.
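
As a minimal sketch of the size substitution described above, the following Python helper builds an Ollama command line for a chosen size tag. The model name `deepseek-r1` and the helper name `ollama_run_command` are assumptions made for illustration; the original command is not shown in this post, so check the tag against the Ollama model library before running it.

```python
import shlex

# Size tags taken from the text above. The model name "deepseek-r1" is an
# assumption about how the model is published in the Ollama library.
SIZES = ("1.5b", "7b", "14b", "32b", "70b", "671b")


def ollama_run_command(size: str = "1.5b", model: str = "deepseek-r1") -> list[str]:
    """Return the argument list for starting the chosen model size with Ollama.

    This is a hypothetical helper; it only builds the command and does not
    run it.
    """
    tag = size.lower()
    if tag not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    return ["ollama", "run", f"{model}:{tag}"]


if __name__ == "__main__":
    # Start small by default, then pass a larger tag if the hardware allows.
    print(shlex.join(ollama_run_command()))
    print(shlex.join(ollama_run_command("7b")))
```

Returning an argument list rather than a single string keeps the command safe to hand to `subprocess.run` without shell quoting issues.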
