5 Days to a Better DeepSeek

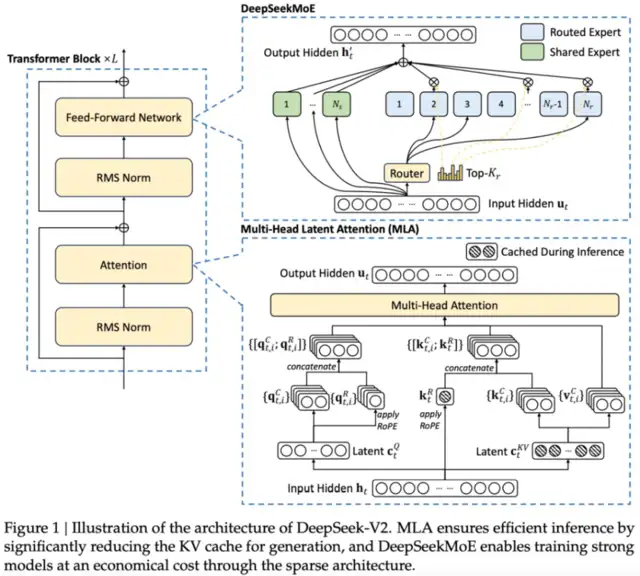

The DeepSeek Coder models @hf/thebloke/deepseek-coder-6.7b-base-awq and @hf/thebloke/deepseek-coder-6.7b-instruct-awq are now available on Workers AI.

Fortunately, these limitations are expected to be naturally addressed with the development of more advanced hardware. However, in more general scenarios, constructing a feedback mechanism through hard-coding is impractical. During the development of DeepSeek-V3, for these broader contexts, we employ the constitutional AI approach (Bai et al., 2022), using the voting evaluation results of DeepSeek-V3 itself as a feedback source. We believe that this paradigm, which combines supplementary information with LLMs as a feedback source, is of paramount importance. The LLM serves as a versatile processor capable of transforming unstructured information from diverse scenarios into rewards, ultimately facilitating the self-improvement of LLMs.

In addition to standard benchmarks, we also evaluate our models on open-ended generation tasks using LLMs as judges, with the results shown in Table 7. Specifically, we adhere to the original configurations of AlpacaEval 2.0 (Dubois et al., 2024) and Arena-Hard (Li et al., 2024a), which use GPT-4-Turbo-1106 as the judge for pairwise comparisons. Similarly, DeepSeek-V3 shows exceptional performance on AlpacaEval 2.0, outperforming both closed-source and open-source models. On FRAMES, a benchmark requiring question answering over 100k-token contexts, DeepSeek-V3 closely trails GPT-4o while outperforming all other models by a significant margin.
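The voting-based feedback described above can be sketched as follows: ask a judge for a verdict several times, keep the majority verdict, and map it to a scalar reward. This is a minimal illustration, not DeepSeek-V3's pipeline. The `Judge` callable, the verdict labels, and the `VERDICT_REWARD` scale are all hypothetical; in practice the judge would be an LLM prompted with a rubric and sampled with nonzero temperature so that repeated calls can disagree.

```python
import itertools
from collections import Counter
from typing import Callable, Dict, List, Tuple

# Hypothetical judge interface: takes a response and returns a verdict label.
# In a real pipeline this would be an LLM prompted with a rubric (a "constitution")
# and sampled with temperature > 0, so repeated calls can return different labels.
Judge = Callable[[str], str]

# Assumed mapping from verdict labels to scalar rewards.
VERDICT_REWARD: Dict[str, float] = {"good": 1.0, "acceptable": 0.5, "bad": 0.0}


def vote_reward(response: str, judge: Judge, n_votes: int = 5) -> Tuple[float, float]:
    """Majority-vote several judge verdicts and turn the winning verdict into a reward.

    Returns (reward, agreement), where agreement is the share of votes that
    matched the majority verdict; low agreement can flag noisy feedback.
    """
    if n_votes < 1:
        raise ValueError("n_votes must be at least 1")
    votes: List[str] = [judge(response) for _ in range(n_votes)]
    verdict, count = Counter(votes).most_common(1)[0]
    return VERDICT_REWARD[verdict], count / n_votes


if __name__ == "__main__":
    # Stub judge that cycles through canned verdicts instead of calling an LLM.
    canned = itertools.cycle(["good", "good", "bad", "good", "acceptable"])
    print(vote_reward("def add(a, b): return a + b", lambda _r: next(canned)))
    # → (1.0, 0.6)
```

Returning the agreement ratio alongside the reward is a design choice: a downstream trainer could down-weight or discard samples where the votes split.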

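A minimal sketch of calling one of the DeepSeek Coder models named at the start of this post through Cloudflare's Workers AI REST API, using only the Python standard library. It assumes the `accounts/{account_id}/ai/run/{model}` endpoint pattern with a bearer token and a `{"prompt": ...}` JSON body. The account ID and token are placeholders, and these models may since have been retired, so check the current Workers AI documentation before relying on this.

```python
import json
import urllib.request

# Model ID quoted from the post; its availability on Workers AI may have changed.
MODEL_ID = "@hf/thebloke/deepseek-coder-6.7b-instruct-awq"


def build_workers_ai_request(account_id: str, api_token: str, prompt: str,
                             model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a POST request to the assumed Workers AI endpoint."""
    url = f"https://api.cloudflare.com/client/v4/accounts/{account_id}/ai/run/{model}"
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run(req: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholders only: substitute a real account ID and API token before calling run().
    req = build_workers_ai_request("YOUR_ACCOUNT_ID", "YOUR_API_TOKEN",
                                   "Write a Python function that reverses a string.")
    print(req.full_url)
```

Building the request separately from sending it keeps the URL, headers, and body easy to inspect before any network call is made.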
In engineering tasks, DeepSeek-V3 trails behind Claude-Sonnet-3.5-1022 but significantly outperforms open-source models. The open-source DeepSeek-V3 is expected to foster advancements in coding-related engineering tasks. The effectiveness demonstrated in these particular areas indicates that long-CoT distillation could be valuable for enhancing model performance in other cognitive tasks requiring complex reasoning. Notably, it surpasses DeepSeek-V2.5-0905 by a significant margin of 20%, highlighting substantial improvements in tackling simple tasks and showcasing the effectiveness of its advancements. On the instruction-following benchmark, DeepSeek-V3 significantly outperforms its predecessor, the DeepSeek-V2 series, highlighting its improved ability to understand and adhere to user-defined format constraints. Additionally, the judgment ability of DeepSeek-V3 can also be enhanced by the voting technique. The ability to make innovative AI is not restricted to a select cohort of the San Francisco in-group. This high acceptance rate enables DeepSeek-V3 to achieve a significantly improved decoding speed, delivering 1.8 times the TPS (tokens per second). Combined with the framework of speculative decoding (Leviathan et al., 2023; Xia et al., 2023), it can significantly accelerate the decoding speed of the model.
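The link between acceptance rate and decoding speed rests on a standard speculative-decoding identity. The sketch below assumes each drafted token is accepted independently with probability alpha (the simplification used by Leviathan et al., 2023): with gamma drafted tokens per verification step, the expected number of tokens emitted per target-model forward pass is (1 - alpha^(gamma+1)) / (1 - alpha), which for gamma = 1 reduces to 1 + alpha. The second function shows the accept/resample rule that keeps the output distributed exactly as the target model's. The function names and dict-based distributions are illustrative, not DeepSeek-V3's multi-token-prediction code.

```python
import random
from typing import Dict


def expected_tokens_per_step(alpha: float, gamma: int) -> float:
    """Expected tokens produced per target-model forward pass.

    Assumes gamma draft tokens are proposed per step and each is accepted
    independently with probability alpha; a rejection (or full acceptance)
    still yields one extra token sampled from the target model.
    """
    if not 0.0 <= alpha <= 1.0 or gamma < 0:
        raise ValueError("alpha must lie in [0, 1] and gamma must be >= 0")
    if alpha == 1.0:
        return float(gamma + 1)
    return (1.0 - alpha ** (gamma + 1)) / (1.0 - alpha)


def speculative_accept(token: str, p: Dict[str, float], q: Dict[str, float],
                       rng: random.Random) -> str:
    """Verify one draft token sampled from q against the target distribution p.

    Accept with probability min(1, p(x) / q(x)); on rejection, resample from the
    residual max(0, p - q), renormalized. The returned token is distributed as p.
    """
    px, qx = p.get(token, 0.0), q.get(token, 0.0)
    if qx > 0.0 and rng.random() < min(1.0, px / qx):
        return token
    residual = {t: max(0.0, pt - q.get(t, 0.0)) for t, pt in p.items()}
    r = rng.random() * sum(residual.values())
    last = token
    for t, w in residual.items():
        if w > 0.0:
            last = t
            if r < w:
                return t
            r -= w
    return last  # fallback for floating-point round-off


if __name__ == "__main__":
    # With one draft token per step (gamma = 1) the formula reduces to 1 + alpha.
    print(expected_tokens_per_step(0.8, 1))
```

For gamma = 1, an acceptance rate of 0.8 gives 1.8 tokens per target pass. That is an idealized figure: it ignores the cost of producing and verifying the draft, so measured TPS gains depend on the implementation.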

Table 8 presents the performance of these models on RewardBench (Lambert et al., 2024). DeepSeek-V3 achieves performance on par with the best versions of GPT-4o-0806 and Claude-3.5-Sonnet-1022, while surpassing other versions. Our analysis suggests that knowledge distillation from reasoning models is a promising direction for post-training optimization. The manifold perspective also suggests why this might be computationally efficient: early broad exploration happens in a coarse space where precise computation isn't needed, while expensive high-precision operations occur only in the reduced-dimensional space where they matter most. Further exploration of this approach across different domains remains an important direction for future research. While our current work focuses on distilling knowledge from the mathematics and coding domains, this approach shows potential for broader applications across various task domains.

Brass Tacks: How Does LLM Censorship Work?

I worked with the FLIP Callback API for payment gateways about two years ago. Once you have obtained an API key, you can access the DeepSeek API using the following example scripts. The expert models were then trained with RL using an unspecified reward function. The baseline is trained on short-CoT data, while its competitor uses data generated by the expert checkpoints described above. PPO is a trust-region-style policy optimization algorithm that limits the size of each policy update so that the update step does not destabilize the learning process.
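To make the PPO sentence concrete, here is a sketch of the widely used clipped variant of PPO, which enforces the "small update" idea by clipping the probability ratio between the new and old policies rather than solving an explicit trust-region constraint. It computes only the scalar surrogate objective for a batch from log-probabilities and advantages; the function name and the default `eps = 0.2` are illustrative choices, and a real trainer would compute this on tensors and differentiate it.

```python
import math
from typing import Sequence


def ppo_clip_objective(logp_new: Sequence[float], logp_old: Sequence[float],
                       advantages: Sequence[float], eps: float = 0.2) -> float:
    """Mean PPO-Clip surrogate objective over a batch (higher is better).

    For each sample, r = pi_new(a|s) / pi_old(a|s) = exp(logp_new - logp_old),
    and the per-sample term is min(r * A, clip(r, 1 - eps, 1 + eps) * A).
    Clipping removes the incentive to push r outside [1 - eps, 1 + eps],
    which keeps each update close to the old policy.
    """
    n = len(advantages)
    if n == 0 or len(logp_new) != n or len(logp_old) != n:
        raise ValueError("inputs must be non-empty and the same length")
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        total += min(ratio * adv, clipped * adv)
    return total / n


if __name__ == "__main__":
    # Ratio 1.5 with a positive advantage is capped at 1.2; ratio 0.5 with a
    # negative advantage is held at 0.8, so the objective is (1.2 - 0.8) / 2 = 0.2
    # up to floating-point rounding.
    print(ppo_clip_objective([math.log(1.5), math.log(0.5)], [0.0, 0.0], [1.0, -1.0]))
```

Taking the minimum of the clipped and unclipped terms makes the objective a pessimistic bound: the policy gains nothing from moving the ratio further once it leaves the clipping range in the favorable direction.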

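A minimal sketch of accessing the DeepSeek API once you have an API key, using only the Python standard library. It assumes DeepSeek's OpenAI-compatible chat-completions endpoint at `https://api.deepseek.com/chat/completions`, the `deepseek-chat` model name, and the OpenAI-style response shape; verify all three against DeepSeek's current API documentation. The `DEEPSEEK_API_KEY` environment variable name is a hypothetical convention for this example.

```python
import json
import os
import urllib.request

# Assumed values for DeepSeek's OpenAI-compatible API; check the current docs.
DEEPSEEK_URL = "https://api.deepseek.com/chat/completions"
DEFAULT_MODEL = "deepseek-chat"


def build_chat_request(api_key: str, user_message: str,
                       model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
        "stream": False,
    }
    return urllib.request.Request(
        DEEPSEEK_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat-completions response."""
    return response["choices"][0]["message"]["content"]


def chat(api_key: str, user_message: str) -> str:
    """Send the request (needs network access and a valid key) and return the reply."""
    req = build_chat_request(api_key, user_message)
    with urllib.request.urlopen(req) as resp:
        return extract_reply(json.loads(resp.read().decode("utf-8")))


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical environment variable name
    if key:
        print(chat(key, "Hello!"))
    else:
        print("Set DEEPSEEK_API_KEY to send a real request.")
```

Because the endpoint is assumed to be OpenAI-compatible, the same payload shape should also work with OpenAI-style client libraries pointed at DeepSeek's base URL.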